Let's go through an example to see how the platform works. For the source data, we will use open data from the Department of Buildings (DOB), which issues permits for construction and demolition activities in New York City.

Configure data ingestion

Data can be loaded into the application in two ways: manually or automatically.

Automatic data transfer is handled by the Airbyte service. Open the Airbyte UI and create a new connection: link a data source using one of Airbyte's built-in connectors or a custom connector, then define the destination for the data, which is Azure Blob Storage in our case. Once the connection is established and data synchronization is configured, you can proceed to work in the application.

For more information, see the detailed instructions.

Airbyte will automatically create a dataset in the Raw zone and load a file with data from the specified source. Open the application and edit the dataset settings to configure headers if needed.

Follow the link to get more familiar with the Code Editor page.

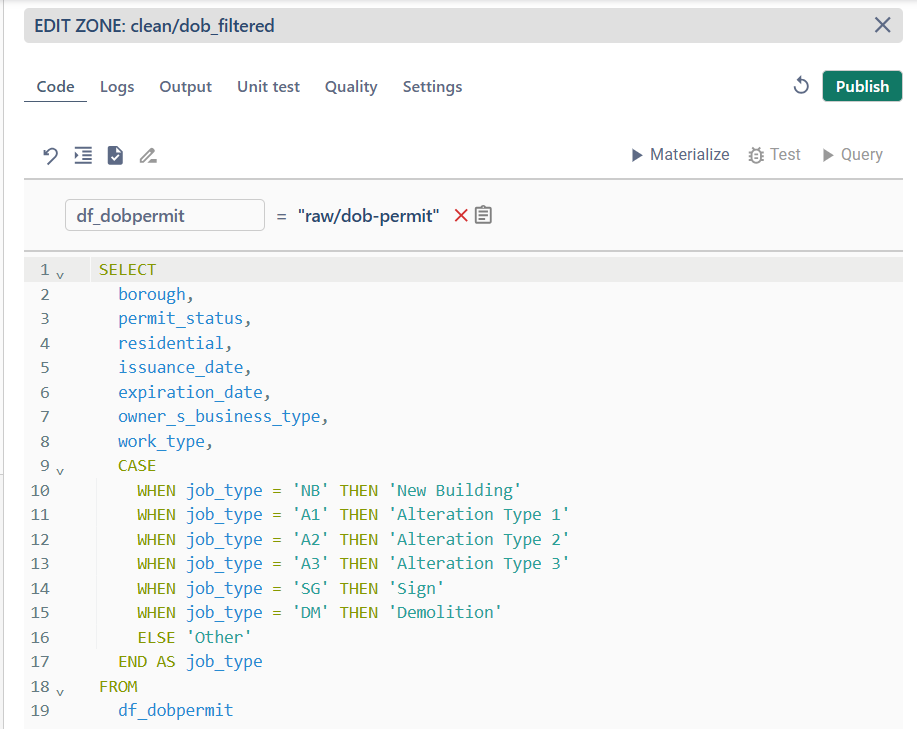

Define logical datasets

In this step, we prepare the data for further analysis by removing unnecessary columns, renaming the remaining ones, and transforming specific values to make them more relevant and usable. The process involves three main steps:

- Create a new dataset in the Clean zone

- Add a source and transformation code (SQL or PySpark) to clean the data

- Materialize and Publish
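As a rough illustration of what the cleaning code in the second step might look like, the sketch below runs a SQL query that drops a column, renames the others, and normalizes one value. It uses Python's built-in sqlite3 module as a local stand-in for the platform's SQL engine, so the dialect may differ. The table name, column names, and sample rows are hypothetical and are not taken from the real DOB schema.

```python
import sqlite3

# Hypothetical raw rows: (BOROUGH, Job Type, Permit Status, Issuance Date, Comments)
RAW_ROWS = [
    ("MANHATTAN", "A2", "issued", "2024-01-15", "internal note"),
    ("BROOKLYN", "DM", "ISSUED ", "2024-02-03", ""),
]

# Keep four columns, rename them to snake_case, and normalize the status value.
# The "Comments" column is dropped simply by not selecting it.
CLEAN_SQL = """
SELECT
    "BOROUGH"                    AS borough,
    "Job Type"                   AS job_type,
    UPPER(TRIM("Permit Status")) AS permit_status,
    "Issuance Date"              AS issuance_date
FROM raw_permits
ORDER BY rowid
"""


def clean_permits(rows):
    """Load the raw rows into an in-memory table and return the cleaned rows as dicts."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        'CREATE TABLE raw_permits ("BOROUGH" TEXT, "Job Type" TEXT, '
        '"Permit Status" TEXT, "Issuance Date" TEXT, "Comments" TEXT)'
    )
    conn.executemany("INSERT INTO raw_permits VALUES (?, ?, ?, ?, ?)", rows)
    cursor = conn.execute(CLEAN_SQL)
    names = [column[0] for column in cursor.description]
    result = [dict(zip(names, row)) for row in cursor.fetchall()]
    conn.close()
    return result


if __name__ == "__main__":
    for row in clean_permits(RAW_ROWS):
        print(row)
```

In the application, only the SELECT statement would go into the dataset's transformation code; the surrounding Python exists only to make the example runnable on its own.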

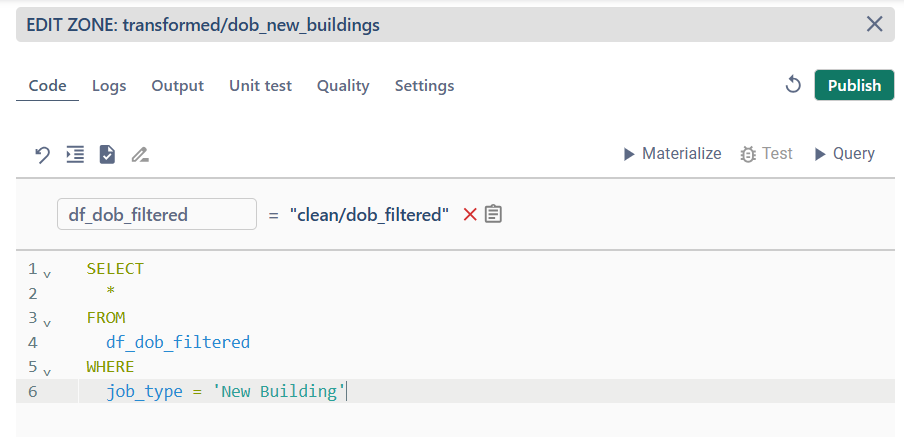

Develop data transformations

In this step, you refine and transform your data to prepare it for specific analytical purposes. By applying filters, aggregations, or other transformation logic, you can derive insights from your datasets. Follow these steps to develop data transformations effectively:

- Create a new dataset in the Transform zone

- Add a source and transformation code to filter data for analysis (SQL or PySpark)

- Materialize and Publish
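A filter followed by an aggregation is a typical shape for the transformation code in this step. The sketch below counts permits of one job type per borough. As before, sqlite3 is only a local stand-in for the platform's SQL engine, and the table name, column names, and the job type code `DM` are assumptions made for illustration.

```python
import sqlite3

# Hypothetical cleaned rows: (borough, job_type, permit_status)
CLEAN_ROWS = [
    ("MANHATTAN", "DM", "ISSUED"),
    ("BROOKLYN", "DM", "ISSUED"),
    ("BROOKLYN", "A2", "ISSUED"),
    ("BROOKLYN", "DM", "ISSUED"),
]

# Filter to one job type, then aggregate per borough.
TRANSFORM_SQL = """
SELECT borough, COUNT(*) AS permit_count
FROM clean_permits
WHERE job_type = 'DM'
GROUP BY borough
ORDER BY borough
"""


def permits_per_borough(rows):
    """Return (borough, permit_count) tuples for the filtered job type."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE clean_permits (borough TEXT, job_type TEXT, permit_status TEXT)"
    )
    conn.executemany("INSERT INTO clean_permits VALUES (?, ?, ?)", rows)
    result = conn.execute(TRANSFORM_SQL).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    print(permits_per_borough(CLEAN_ROWS))
```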

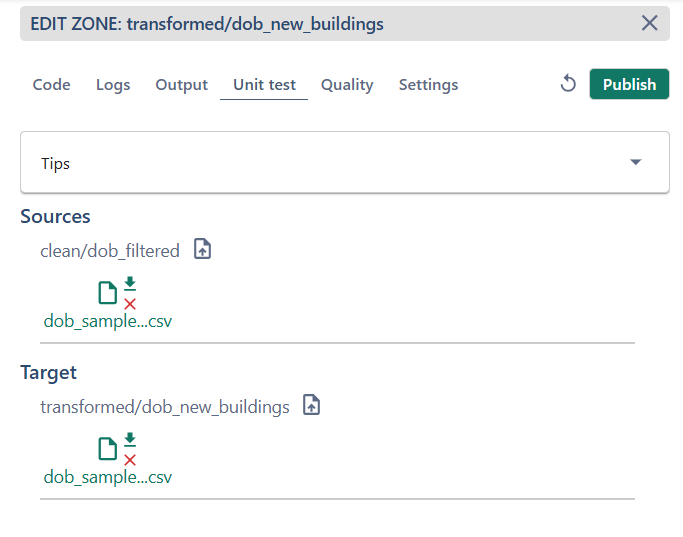

Specify data and unit tests

Define unit tests

Unit tests help ensure that data transformations follow the defined rules accurately. Upload source and target samples to validate the transformation code and confirm its correctness.

- Prepare sample files. Ensure your test sample files meet the following format requirements:

  - File extension: .csv

  - Delimiter: use a comma (,)

  - Header: the file should have a one-line header

  - Quotes: avoid using quotes in the data

- Upload source and target files.

- Run the unit test and review the logs and results to confirm that the data transformations were applied correctly.
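Before uploading, it can help to check a sample file against the format requirements locally. The following is a minimal sketch using Python's standard csv module. The function name `check_sample` is hypothetical, and two of its checks are assumptions rather than documented rules: it treats only double quotes as "quotes", and it expects at least one data row after the header.

```python
import csv
import io


def check_sample(text):
    """Return a list of format problems found in the text of a CSV sample."""
    problems = []
    # Assumption: "quotes" means double-quote characters anywhere in the file.
    if '"' in text:
        problems.append("contains quote characters")
    rows = list(csv.reader(io.StringIO(text), delimiter=","))
    # Assumption: a usable sample has the header plus at least one data row.
    if len(rows) < 2:
        problems.append("needs a header line plus at least one data row")
        return problems
    width = len(rows[0])
    # Every data line should have as many fields as the header.
    for number, row in enumerate(rows[1:], start=2):
        if len(row) != width:
            problems.append(
                f"line {number} has {len(row)} fields, expected {width}"
            )
    return problems


if __name__ == "__main__":
    sample = "borough,job_type\nBROOKLYN,DM\n"
    print(check_sample(sample))
```

An empty list means no problems were found by these checks; it does not guarantee that the platform will accept the file.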

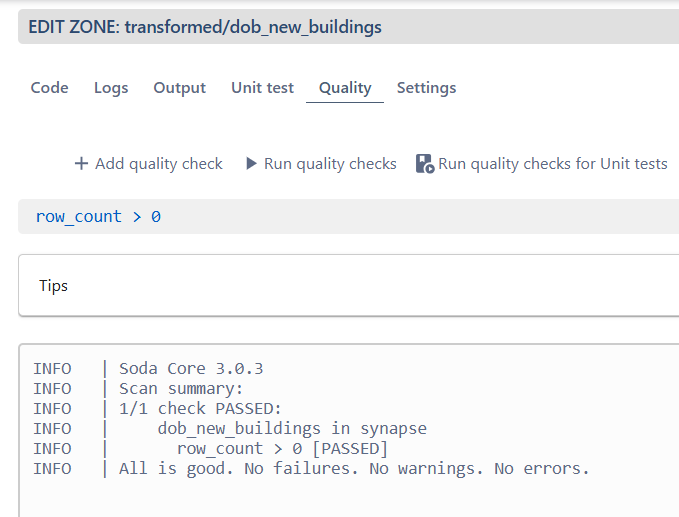

Define data quality tests

Data quality tests help you verify the reliability and integrity of your datasets after transformation. By setting specific quality rules, you can ensure that your data meets predefined standards and requirements, helping to maintain consistency and trust in your analytics pipeline.

- Define quality rules: start by defining the conditions your data must meet.

- Add quality checks.

- Run quality checks and view results: check the Logs section for details about which rules passed or failed.
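To make the idea of a quality rule concrete, the sketch below expresses two rules as plain Python predicates and counts the rows that fail each one. This only illustrates the concept; it is not how rules are entered in the application. The rule names, column names, and allowed status values are hypothetical.

```python
# Hypothetical transformed rows.
ROWS = [
    {"borough": "BROOKLYN", "permit_status": "ISSUED"},
    {"borough": "", "permit_status": "ISSUED"},
    {"borough": "QUEENS", "permit_status": "ISSUED"},
]

# Each rule is a condition that every row is expected to satisfy.
RULES = {
    "borough_not_empty": lambda row: bool(row["borough"]),
    "status_is_known": lambda row: row["permit_status"] in {"ISSUED", "RE-ISSUED"},
}


def run_quality_checks(rows, rules):
    """Return a dict mapping each rule name to the number of rows that fail it."""
    return {
        name: sum(1 for row in rows if not check(row))
        for name, check in rules.items()
    }


if __name__ == "__main__":
    for name, failures in run_quality_checks(ROWS, RULES).items():
        status = "passed" if failures == 0 else f"failed ({failures} rows)"
        print(f"{name}: {status}")
```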

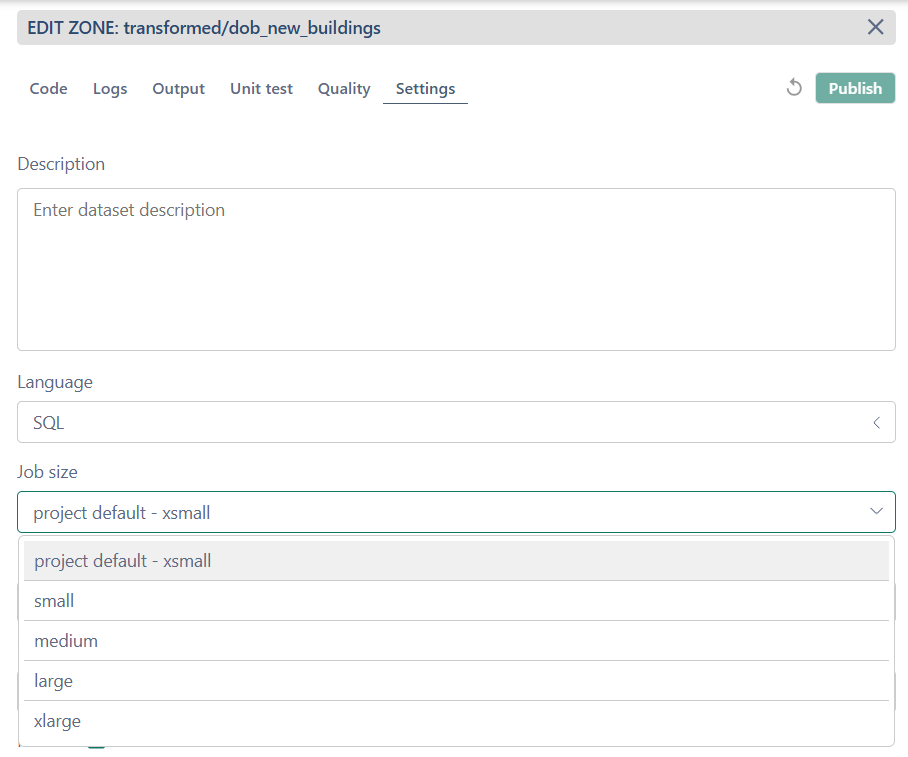

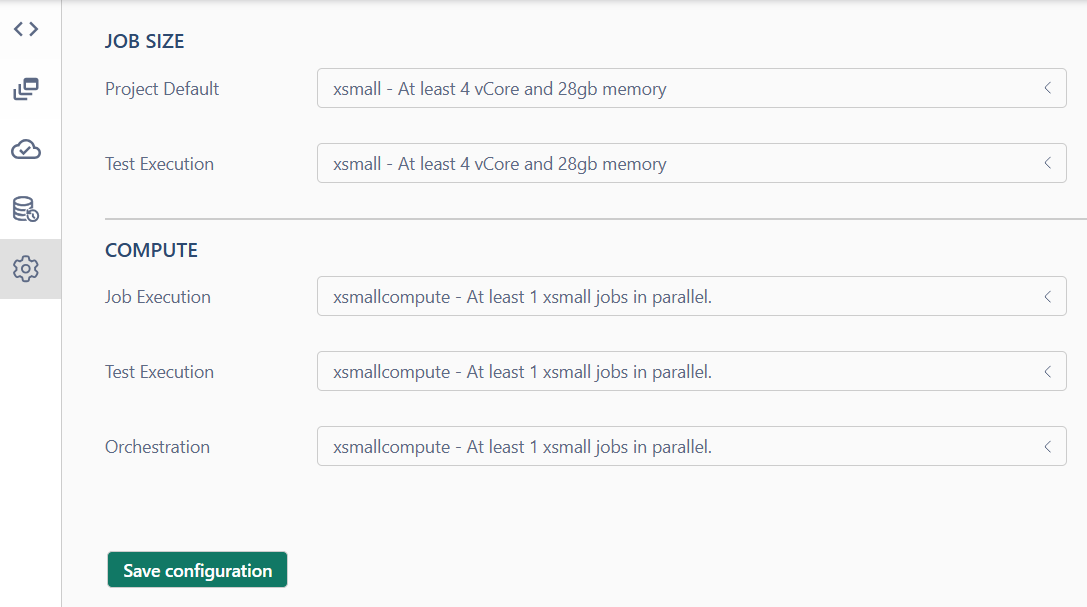

Define job size

You can configure the job size for a specific dataset in the dataset's Settings tab. The project default is defined on the project Settings page and is applied automatically to every dataset that does not set its own value.

To optimize the allocated resources, navigate to the Settings page. Here, you can adjust the default job size for the project, define the job size for test executions, and configure parallel job execution settings.

Configure orchestration

Orchestration lets you manage and monitor the execution of workflows and data pipelines. Choose the refresh mode and save the configuration.

For more information, read the orchestration article.

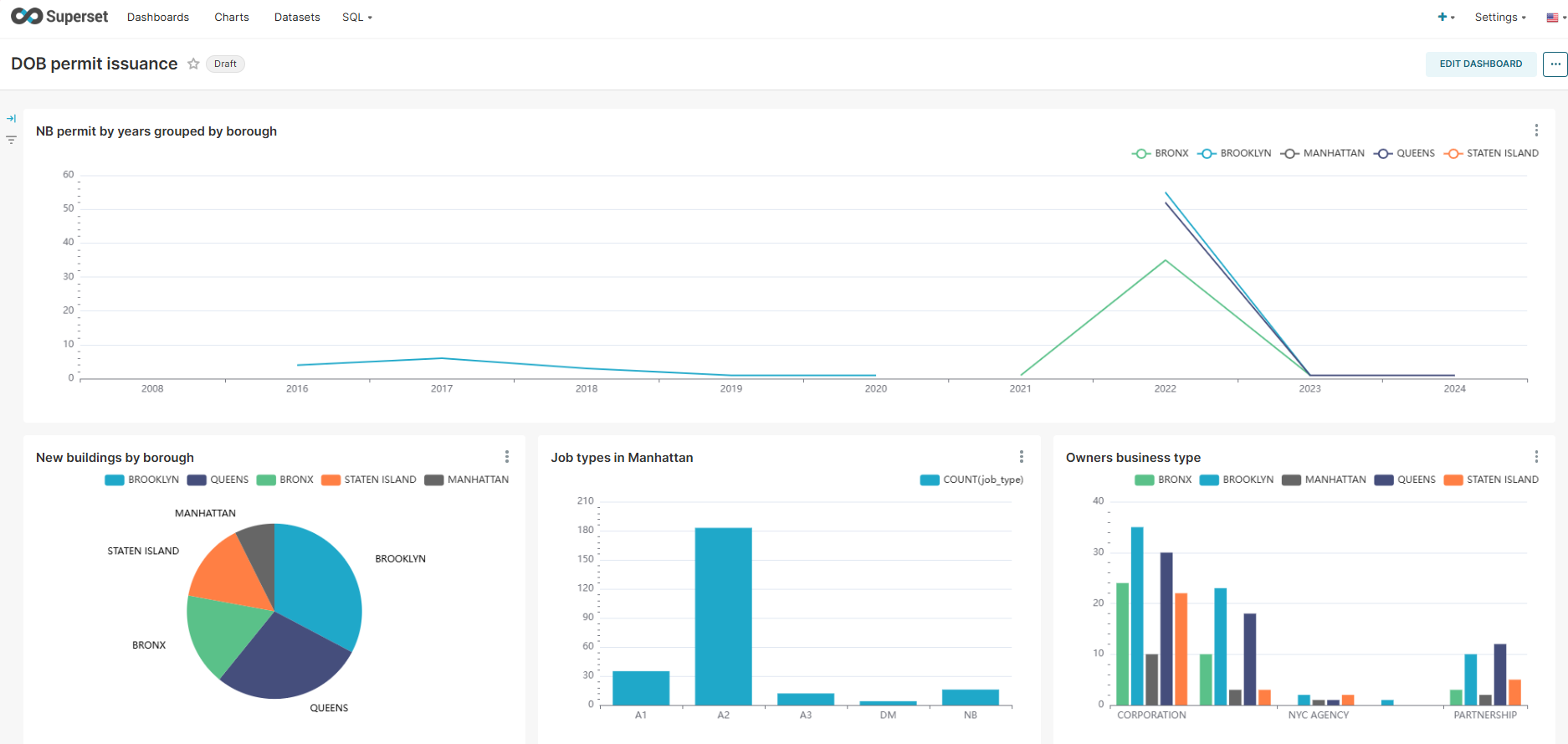

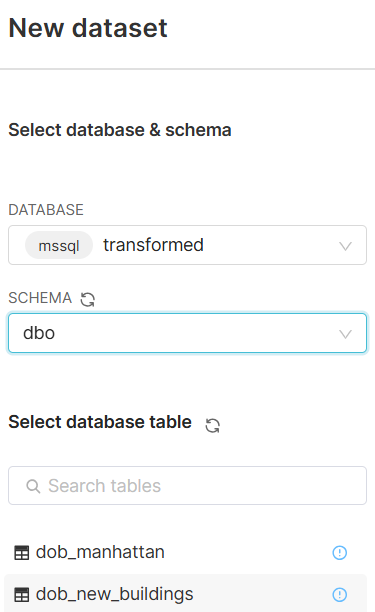

Create dashboards

- Open the Infrastructure page and navigate to the Superset UI

- Create a dashboard and add new charts to visualize your data

The first time you do this, you have to add a dataset: select the database zone and the dbo schema, then choose the dataset from the list of tables.

Create new charts based on the source data and add them to the dashboard.